Technology Tools

Advancing the Next Generation of Learning: ELI 2013


Educators often take advantage of educational technologies as they make the shifts in instruction, teacher roles, and learning experiences that next gen learning requires. Technology should not lead the design of learning, but when educators use it to personalize and enrich learning, it has the potential to accelerate mastery of critical content and skills by all students.

NGLC staff and grantees walked away from the EDUCAUSE Learning Initiative Annual Meeting in Denver this week filled to the brim with new understanding about the work of next generation learning.

Several conversations, sessions, and keynote speakers at this year’s meeting, ELI 2013, focused on:

- what next gen learning is (you can tell us what you think here),

- how we get there from here, and

- how we know if it works.

Perfect for NGLC, right? In this post, I’m going to focus on the last part: how we know if next generation learning works. As Barbara Means of SRI International reminded us in her presentation Wednesday morning, “If we don’t collect credible data on next generation learning impacts, the ‘winners’ will be those with the best PR.”

NGLC grantees from our first wave of funding, Building Blocks for College Completion, shared their experience of gathering evidence at several sessions during the conference. Ray Fleming of the University of Wisconsin-Milwaukee shared the strategies used to study the impact of U-Pace (learn more in the ELI Seeking Evidence of Impact case study). Barbara Means shared evidence from U-Pace as well, along with evidence from Cal State University Northridge’s Hybrid Lab Math Courses and Project Kaleidoscope by Cerritos Community College. She also shared some early findings about all 29 grantees. And in a meetup at the conference, NGLC grantees shared their successes and struggles; evidence-gathering was a common theme in their comments.

Here are a few key takeaways drawn from our three days in Denver:

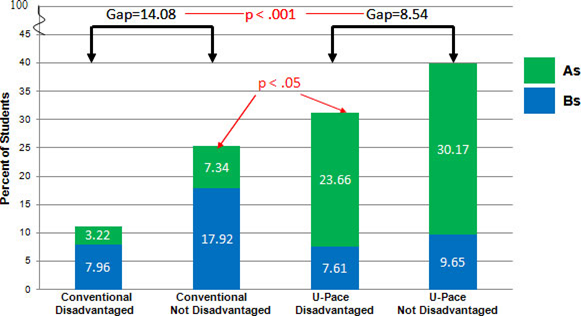

It’s hard to collect meaningful data, but it can be done. The “Building Blocks” grantees, charged with scaling their innovations at multiple institutions, found it difficult to navigate Institutional Review Board (IRB) applications and policies at all of those institutions. Random assignment to courses and sharing of student-level data are essential for experimental research design, but they seemed to be the source of many IRB woes. Even when those barriers can be crossed, it’s often difficult to get true comparisons in which effects can be attributed to the innovation (as opposed to the instructor, instruction, and student characteristics). Ray Fleming was able to conduct a randomized controlled study and found some significant effects of U-Pace. (So was his colleague J.D. Walker of the University of Minnesota, who co-presented on evaluating active learning classrooms.) U-Pace showed positive effects on the percentage of students earning an A or B in the course and on students completing the course, both at the University of Wisconsin-Milwaukee and at two partner campuses.

The “Pancake Rule” (the first pancake usually is tossed because it never comes out right) can be applied to educational technology innovations as well. These innovations require multiple iterations of testing and improvement before they are developed enough to demonstrate positive outcomes, and a judgment based on the first iteration cannot be the final word on their effectiveness.

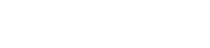

Scale and evidence-building are traditionally slow and incremental in higher education. Next generation learning will benefit from adopting the iterative design process of entrepreneurs and the high-tech industry. The mantra of “fail early and often” means that each round of improvement happens rapidly, so students are better served sooner. At the same time that NGLC grantees were challenged to scale their innovations to multiple institutions, MOOCs (also a big topic of conversation at the conference) caught on like wildfire, scaling exponentially. MOOCs weren’t designed with course completion in mind, however, and it shows in the evidence: 77% of students using NGLC innovations completed their course, compared to 5% of students in MOOCs. But as designers iterate on MOOCs using evidence collected from their millions of users, will their completion rates improve exponentially too?

Define clearly what success means. MOOCs were designed with access rather than completion in mind, which is a reminder that it’s important to collect evidence on the right indicators of success. For NGLC “Building Blocks” grantees, success means improved course completion and deeper learning, but each project also has its own specific indicators of success. For example, Project Kaleidoscope promoted the adoption of open educational resources in academic courses to remove the burden of textbook costs, particularly for students from underrepresented groups. So, when looking at effects on course completion and persistence for 10 courses in Project Kaleidoscope (three showed positive effects, six showed no difference, and one showed negative effects), the project directors count it as a success when students do as well as or better than they do in comparable courses that don’t use free content.

It’s important to know why an innovation is effective. Knowing that a next gen learning innovation works is important, but it’s most helpful to know which design characteristics and underlying mechanisms influence outcomes. Ray Fleming shared his conclusion, based on analysis of the data, that student engagement and a sense of control over learning within the course were mediating factors that led to the successful outcomes found in the research study on U-Pace.

These early findings from NGLC grantees are encouraging. This fall, SRI International will share the results of its full evaluation of the NGLC “Building Blocks” grantees. We all love a compelling story, but next gen learning needs more compelling stories that are backed by credible data. Only then will we know that students are the real winners.

Help Us Define Next Gen Learning

Tell us what should be included in the definition of Next Gen Learning here.

Download the ELI Presentation Archives

Next-Generation Learning: What is it? And Will it Work? by Barbara Means

Why Intervention and Impact Evaluation is Critical for Improving Student Learning by Ray Fleming and J.D. Walker