
ChatGPT, Schools, and Non-Artificial Intelligence


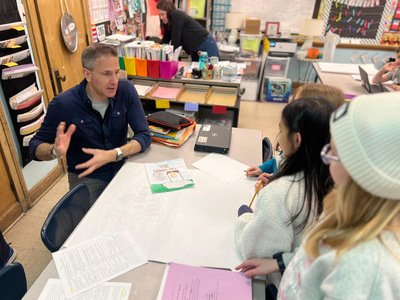

Educators often take advantage of educational technologies as they make the shifts in instruction, teacher roles, and learning experiences that next gen learning requires. Technology should not lead the design of learning, but when educators use it to personalize and enrich learning, it has the potential to accelerate mastery of critical content and skills by all students.

These early questions, hunches, and observations about artificial intelligence and ChatGPT explore what this latest leap forward means for teaching and learning.

Welcome to the future of education, where AI and teachers join forces. While AI provides personalized instruction, teachers mentor and guide students, leveraging AI's data to understand student needs and adapt instruction. Together they provide a holistic learning experience, where the human touch and ethical guidance of teachers complements the customization and efficiency of AI. Join the journey of discovering the new heights education can reach with the perfect blend of human and technology.

I didn’t write that paragraph; ChatGPT did. I told it to “write me a catchy, 80-word introduction about the role of teachers in a world of AI.” (For better or worse, the rest of this post is all me, I promise.)

ChatGPT, and AI (Artificial Intelligence) in general, is rapidly replacing COVID as the topic that is bound to come up when three or more educators get together and, well, chat. Like COVID, it affects us all deeply. Also like COVID, most of us have a limited understanding of how it really works. (Also like COVID, too many of us will rush to pretend that we do.)

Personally, I am super early on the learning curve for all of this stuff. I have a conceptual understanding of how AI works but zero background in computer science or machine learning. I have early questions or hunches about what this latest leap forward might mean for teaching, learning, and schools. But mostly, I’m listening to what my colleagues are thinking. Here are a few early questions, hunches, and observations.

Writing simple stuff manually will (and maybe should?) become obsolete.

Take another look at ChatGPT's paragraph at the top of this post. It succeeds in being about what I asked it to be about. It is also stunningly unoriginal, a mash-up of the things we, collectively, have said about a topic, filtered through an algorithm that stands in for tone. And for some purposes, that’s totally fine! Some writing is just the manufacturing of boring words for compliance purposes. Personally, I am happy to let the machines do that stuff, the same way I am happy to build budgets in spreadsheets rather than using an abacus and some parchment.

Schools currently ask students to do an awful lot of simple stuff.

My friend and colleague Aiden Downey recently quipped that schools have long specialized in making students artificially intelligent. He’s right. Schools are especially vulnerable to ChatGPT and the like because they ask students to do so much work that’s formulaic.

Process over product.

As educators, the more we emphasize how students think and work (both in teaching and assessment) instead of what they produce at the end, the less it will matter what technology they are or are not using. In writing, for example, what if we focused on artifacts of students’ thinking, or how a draft changed or improved over time rather than where it ended up? In short: a bunch of little data points instead of one big one.

Asking the right question over getting the right answer.

ChatGPT is very good at following orders, but at least for now (I have seen ALL the sci-fi movies!) it isn’t capable of coming up with them. How do you know what you need written? And what will you do with it once you get it? Can you look at a complex problem and figure out what the right questions are?

Rethinking literacy.

The fact that machines can credibly write for us means we all need to become more critical readers. Not so we can detect what is human-written versus machine-written (there will be AI for that too!), but rather because we need to be able to discern whether what the machines have written actually meets our needs. ChatGPT's opening paragraph is cleanly written and it’s about the same thing as the rest of this post, but it’s a lousy introduction. The real thinking lies in knowing why. (I also hope that AI pushes us to broaden the scope of how students can show what they’re learning beyond just reading and writing. There are a lot of ways to know stuff and a lot of ways to show it.)

This technology will revolutionize our society, but that doesn’t mean it will revolutionize our schools.

Historian Larry Cuban has written brilliantly over the years about how, almost as soon as we rolled out public education in this country, we began making breathless proclamations about how new technology would revolutionize it, usually to comical effect.

If I had to bet, I would say that in the near term, educational institutions will spend more time, energy, and resources fighting AI applications than they will adapting to them. We will likely see growing interest in controlled-environment testing and other approaches whose first concern is to prevent “cheating” rather than to promote learning. (After all, for years we’ve had technologies that can solve math problems, and schools have mostly focused on keeping them out of the classroom.)

If I had to summarize my very preliminary thoughts on AI in education, they boil down to a question. That question is not whether these technologies will fundamentally change how we learn and work (they will), but whether we can reimagine what school looks like within that new landscape.

Photo at top by Liza Summer on Pexels.